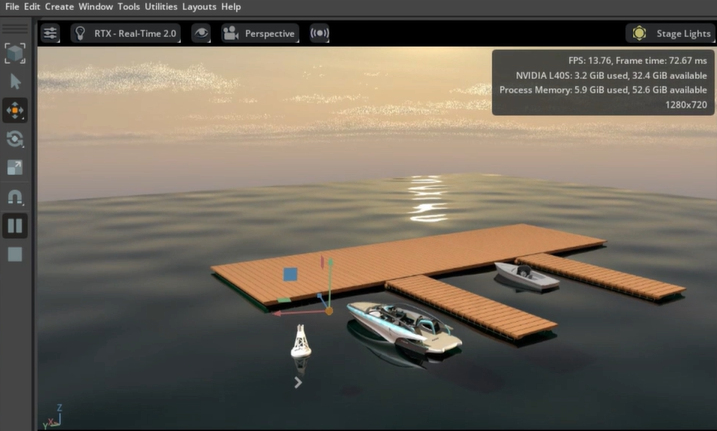

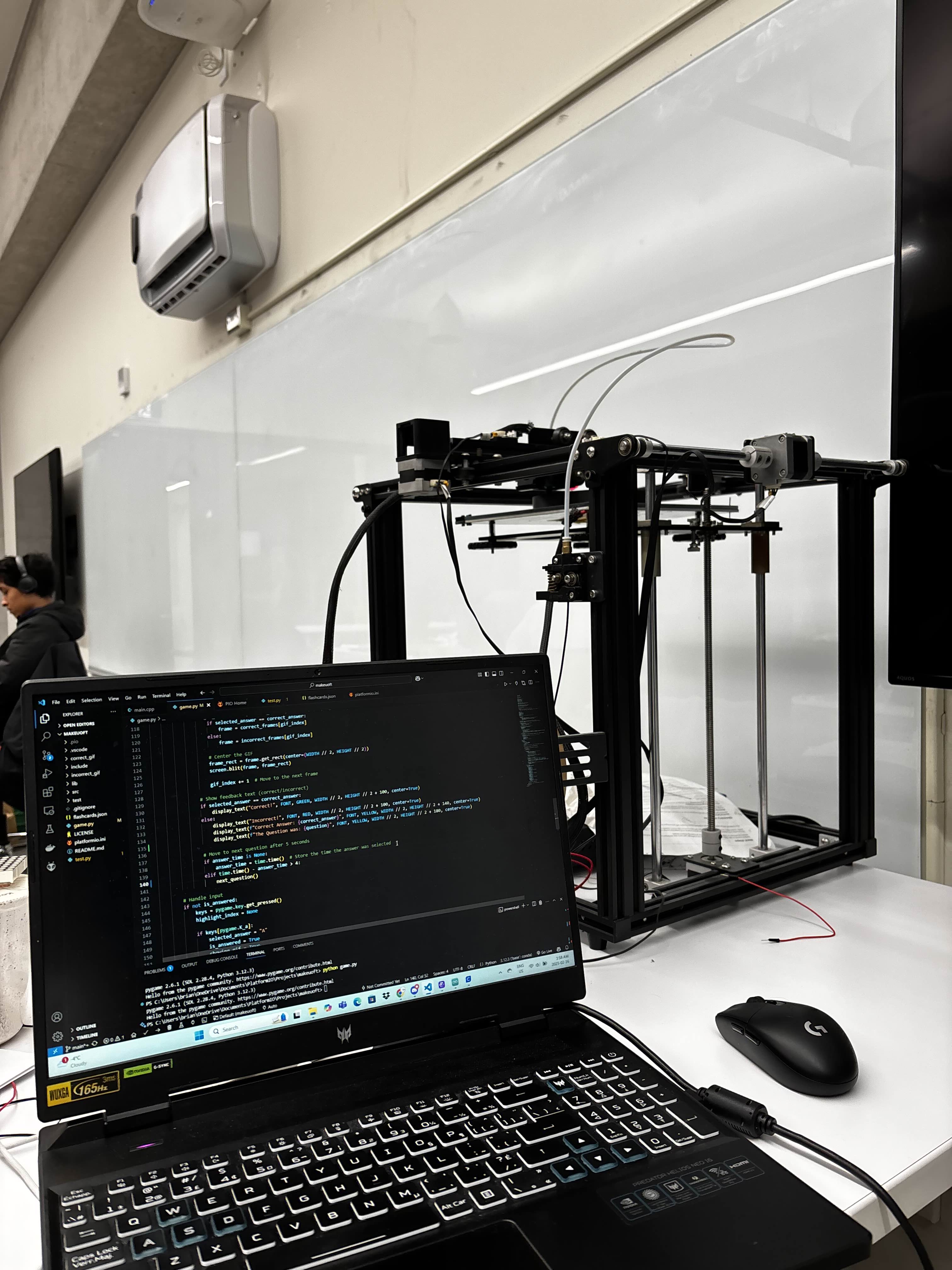

L4 Self-Driving Car

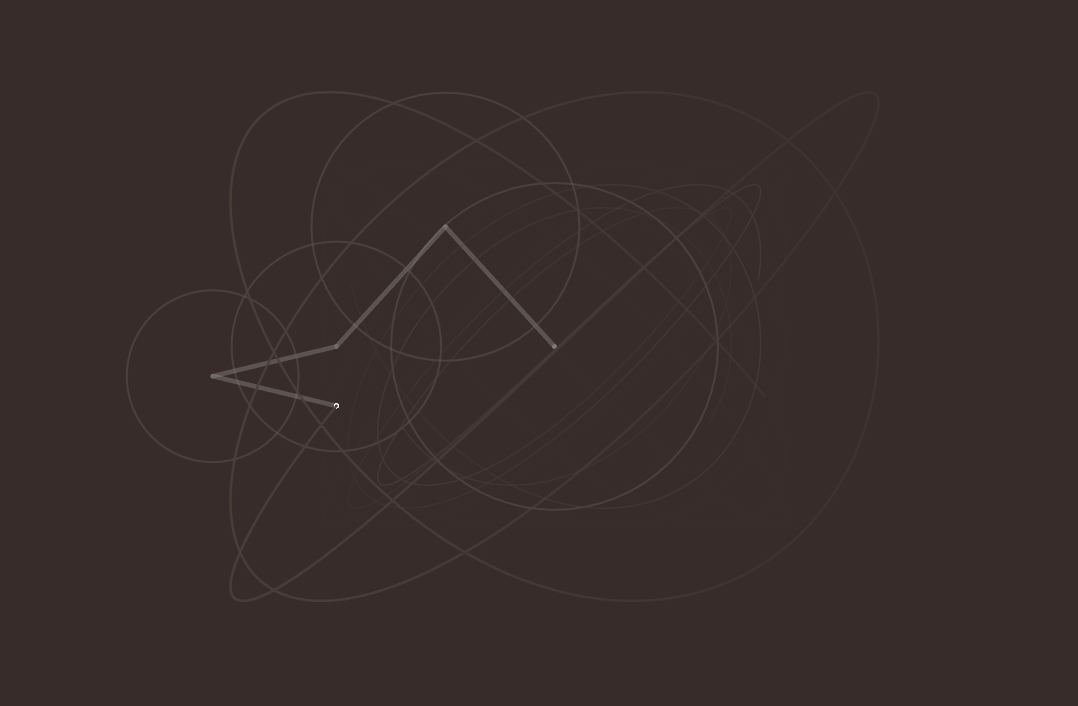

I lead the development of a Level 4 autonomous vehicle, with a strong emphasis on real-world deployment, safety, and research-grade perception. The project covers the full autonomy stack (sensing, perception, localization, planning, and control), built on ROS 2 and modern ML infrastructure.